Reinforcement learning and its methods

What Is Reinforcement Learning?

Reinforcement learning (RL) is the practice of training machine learning models to make a sequence of decisions. In reinforcement learning, an agent interacts with a complex environment, learns from how the environment responds to its actions, and adjusts its behavior accordingly. This is comparable to how children learn about the world and discover which steps help them reach a particular goal in life.
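The interaction loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a standard API: `CorridorEnv` is a hypothetical toy environment (a five-cell corridor with the goal in the last cell), and the reward values (-0.1 per step, +1 at the goal) and the `run_episode` helper are invented for the example. Nothing is learned yet; the sketch only shows the agent acting, the environment responding, and the agent receiving feedback.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: a 1-D corridor of cells 0..length-1.
    The agent starts in cell 0; the episode ends when it reaches the last cell."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        """Start a new episode and return the initial state."""
        self.state = 0
        return self.state

    def step(self, action):
        """Apply an action (0 = left, 1 = right) and return (next_state, reward, done)."""
        move = 1 if action == 1 else -1
        # Walls at both ends keep the agent inside the corridor
        self.state = min(max(self.state + move, 0), self.length - 1)
        done = self.state == self.length - 1
        # Assumed rewards: small penalty per step, bonus for reaching the goal
        reward = 1.0 if done else -0.1
        return self.state, reward, done


def run_episode(env, choose_action, max_steps=100):
    """Let an agent interact with the environment for one episode
    and return the total reward it collected."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = choose_action(state)           # the agent acts...
        state, reward, done = env.step(action)  # ...the environment responds...
        total_reward += reward                  # ...and the agent receives feedback
        if done:
            break
    return total_reward


random.seed(0)
env = CorridorEnv(length=5)
print("always-right agent:", run_episode(env, lambda s: 1))
print("random agent:", run_episode(env, lambda s: random.choice([0, 1])))
```

An agent that always moves right reaches the goal in four steps and collects 0.7 (the +1 bonus minus three step penalties of 0.1); a random agent can never do better here and usually does worse. A reinforcement learning algorithm has to discover that better behavior from the rewards alone.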

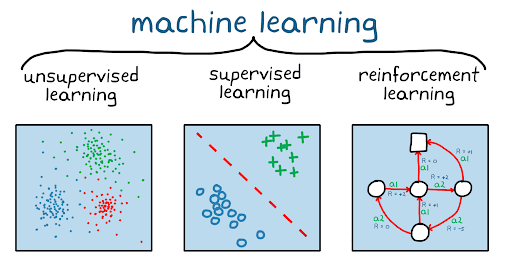

The Difference Between Supervised, Unsupervised & Reinforcement Learning

Both supervised and reinforcement learning map inputs to outputs, but they differ in the feedback they rely on. In supervised learning, the feedback is the correct answer, such as the right set of actions for performing a task, whereas reinforcement learning uses rewards and punishments as signals of positive and negative behavior. The distinction between reinforcement learning and unsupervised learning comes down to what each approach aims to accomplish. Unsupervised learning looks for similarities and differences within data, as we explained in our essay, whereas reinforcement learning looks for a model of behavior (a policy) that maximizes the agent’s cumulative reward.

Types Of Reinforcement Learning

There are two types of reinforcement: positive reinforcement and negative reinforcement.

- Positive reinforcement: adding a reward when a desired behavior occurs, which increases the probability that the behavior will be repeated in the future. For example, a parent praises their child (reinforcing stimulus) for doing homework (behavior).

- Negative reinforcement: removing an unpleasant condition when a desired behavior occurs, which also increases the chance that the behavior will be repeated. For example, someone presses a button (behavior) that turns off a loud alarm (aversive stimulus).

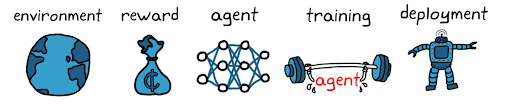

Elements Of Reinforcement Learning

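Aside from the agent and the environment, reinforcement learning has four main components: a policy, a reward signal, a value function, and, optionally, a model of the environment, which predicts how the environment will respond to the agent’s actions and can be used for planning.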

Policy.

A policy defines how the agent behaves at a given time: it maps the states the agent observes to the actions it takes. The policy might be as simple as a lookup table or a basic function, but it can also involve complex computation. The agent’s behavior is determined primarily by its policy.
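Both kinds of policy mentioned above can be sketched in Python. This is an illustrative sketch under assumptions: the five-cell corridor, the action encoding (0 = left, 1 = right), and the function names are hypothetical, and epsilon-greedy is just one common way of computing a policy from action-value estimates.

```python
import random

# Hypothetical 5-cell corridor; action 0 = move left, action 1 = move right.

# 1) A policy as a lookup table: state -> action
#    (cell 4 is the terminal goal, so it needs no entry)
table_policy = {0: 1, 1: 1, 2: 1, 3: 1}


def table_lookup_policy(state):
    """Deterministic policy: read the action straight from the table."""
    return table_policy[state]


# 2) A policy computed by a function of action-value estimates
def epsilon_greedy_policy(q_values, epsilon=0.1, rng=random):
    """Stochastic policy: with probability epsilon pick a random action
    (explore); otherwise pick the action with the highest estimate (exploit)."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


rng = random.Random(42)
print(table_lookup_policy(0))                      # action read from the table
print(epsilon_greedy_policy([0.2, 0.7], rng=rng))  # usually 1, occasionally random
```

The lookup table is fixed once written, while the epsilon-greedy function changes its behavior automatically as the value estimates it receives are updated.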

Reward signal.

At each time step, the agent immediately receives a single number from the environment, known as the reward signal or simply the reward. Depending on the agent’s actions, the reward can be positive (advantageous) or negative (disadvantageous), as mentioned earlier.

Value function.

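Whereas the reward indicates what is good immediately, the value function estimates what is good in the long run: the value of a state (or of taking a particular action in that state) is the total reward the agent can expect to accumulate from that point onward. The value function depends on both the agent’s policy and the rewards, and the agent uses these estimates to choose actions that maximize its cumulative reward.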
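To make the dependence on the policy concrete, the sketch below uses iterative policy evaluation, which repeatedly applies V(s) = Σ_a π(a|s) [r + γ V(s′)] until the estimates stop changing. The corridor environment, the rewards (-0.1 per step, +1 for reaching the goal), and the discount factor γ = 0.9 are assumptions made for illustration, and `evaluate_policy` is a hypothetical name.

```python
def evaluate_policy(prob_right, length=5, gamma=0.9, tol=1e-10):
    """Iterative policy evaluation on a hypothetical 1-D corridor.

    prob_right: probability that the policy moves right (action 1) in every state.
    Returns V, where V[s] estimates the expected discounted return from state s.
    Assumed rewards: +1.0 for entering the goal (last cell), -0.1 for any other step.
    """
    goal = length - 1
    V = [0.0] * length  # V[goal] stays 0: no reward follows the end of an episode
    while True:
        delta = 0.0
        for s in range(goal):  # every non-terminal state
            new_v = 0.0
            for move, prob in ((+1, prob_right), (-1, 1.0 - prob_right)):
                s_next = min(max(s + move, 0), goal)  # walls clamp the position
                reward = 1.0 if s_next == goal else -0.1
                # Bellman expectation: immediate reward + discounted value of next state
                new_v += prob * (reward + gamma * V[s_next])
            delta = max(delta, abs(new_v - V[s]))
            V[s] = new_v
        if delta < tol:  # stop once a full sweep barely changes anything
            return V


print([round(v, 3) for v in evaluate_policy(prob_right=1.0)])  # always move right
print([round(v, 3) for v in evaluate_policy(prob_right=0.5)])  # move at random
```

The rewards are identical in both runs, yet the same states get very different values, because the value function measures what the agent can expect to collect while following a given policy.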

Some Of The Most Used Reinforcement Learning Algorithms

Reinforcement learning algorithms, widely used in AI and game-playing applications, fall into two categories: model-free and model-based. Model-free algorithms learn values or policies directly from experience, whereas model-based algorithms use a model of the environment to plan ahead. Q-learning and deep Q-learning are examples of model-free RL algorithms.

Q-Learning.

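Q-learning is an off-policy temporal difference (TD) learning algorithm. TD methods learn by comparing temporally successive predictions: each estimate is moved toward the observed reward plus the current estimate for the next state. Q-learning learns the action-value function Q(s, a), which measures how good it is to take action “a” in a particular state “s.” It is off-policy because it learns the value of the greedy policy while following a different, exploratory policy.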
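A minimal tabular sketch of Q-learning, using the standard update Q(s, a) ← Q(s, a) + α [r + γ max_a′ Q(s′, a′) - Q(s, a)]. The corridor environment, the reward scheme, the hyperparameters (α = 0.1, γ = 0.9, ε = 0.1, 500 episodes), and the name `q_learning` are assumptions chosen for illustration.

```python
import random


def q_learning(length=5, episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a hypothetical 1-D corridor.
    States are cells 0..length-1 (the last cell is the terminal goal);
    action 0 moves left, action 1 moves right."""
    rng = random.Random(seed)
    goal = length - 1
    Q = [[0.0, 0.0] for _ in range(length)]  # Q[state][action], initialised to 0

    def step(state, action):
        nxt = min(max(state + (1 if action == 1 else -1), 0), goal)
        reward = 1.0 if nxt == goal else -0.1  # assumed reward scheme
        return nxt, reward, nxt == goal

    def behaviour_policy(state):
        # epsilon-greedy exploration; ties are broken at random
        if rng.random() < epsilon or Q[state][0] == Q[state][1]:
            return rng.randrange(2)
        return 0 if Q[state][0] > Q[state][1] else 1

    for _ in range(episodes):
        state, done = 0, False
        while not done:
            action = behaviour_policy(state)
            nxt, reward, done = step(state, action)
            # Off-policy TD target: bootstrap from the best next action,
            # whatever action the behaviour policy ends up taking there
            target = reward if done else reward + gamma * max(Q[nxt])
            Q[state][action] += alpha * (target - Q[state][action])
            state = nxt
    return Q


Q = q_learning()
greedy = ["right" if q[1] > q[0] else "left" for q in Q[:-1]]
print(greedy)  # greedy action in each non-terminal cell
```

After training, the greedy action in every non-terminal cell should be to move right, the shortest path to the goal, even though the agent explored randomly some of the time.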

The flowchart below explains how Q-learning works:

Monte Carlo Method.

The Monte Carlo (MC) approach is one of the most widely used methods for an agent to find a policy that maximizes cumulative reward. It is only suitable for episodic tasks, that is, tasks with a clear ending, because it waits until an episode finishes before computing the returns it learns from.

With this approach, the agent learns directly from complete episodes of experience, without needing a model of the environment.
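A minimal sketch of first-visit Monte Carlo prediction, the building block that MC control methods use to evaluate a policy before improving it. It estimates V(s) as the average discounted return observed after the first visit to s in each complete episode. The corridor environment, the rewards, γ = 0.9, the number of episodes, the random policy being evaluated, and the name `mc_prediction` are all assumptions for illustration.

```python
import random


def mc_prediction(policy, length=5, episodes=5000, gamma=0.9, seed=0):
    """First-visit Monte Carlo estimate of V(s) for a fixed policy on a
    hypothetical 1-D corridor whose last cell is the terminal goal."""
    rng = random.Random(seed)
    goal = length - 1
    returns_sum = [0.0] * length
    returns_count = [0] * length

    for _ in range(episodes):
        # 1. Play one complete episode, recording (state, reward) at each step
        episode, state = [], 0
        while state != goal:
            action = policy(state, rng)  # 0 = left, 1 = right
            nxt = min(max(state + (1 if action == 1 else -1), 0), goal)
            reward = 1.0 if nxt == goal else -0.1  # assumed reward scheme
            episode.append((state, reward))
            state = nxt

        # 2. Remember the time step of the first visit to each state
        first_visit = {}
        for t, (s, _) in enumerate(episode):
            first_visit.setdefault(s, t)

        # 3. Walk backwards, accumulating the discounted return G
        G = 0.0
        for t in range(len(episode) - 1, -1, -1):
            s, r = episode[t]
            G = r + gamma * G
            if first_visit[s] == t:
                returns_sum[s] += G
                returns_count[s] += 1

    # 4. The estimate for each state is its average observed return
    return [returns_sum[s] / returns_count[s] if returns_count[s] else 0.0
            for s in range(length)]


def random_policy(state, rng):
    return rng.randrange(2)


V = mc_prediction(random_policy)
print([round(v, 2) for v in V])
```

No update happens until an episode has ended, which is why the method needs episodic tasks. With more episodes the averages approach the true values of the policy being followed; an MC control method would alternate this evaluation with making the policy greedier.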

SARSA

SARSA is an on-policy temporal difference algorithm. As a result, it learns the value function from the action it actually takes, which is chosen by the current policy.

When updating the Q-value, SARSA takes into account the agent’s current state (S), the action it chooses to take (A), the reward it receives for taking that action (R), the state it enters after taking that action (S′), and the action it takes in that new state (A′). This quintuple (S, A, R, S′, A′) gives the algorithm its name.
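The sketch below uses the same hypothetical corridor and assumed hyperparameters as a tabular Q-learning setup, but applies the SARSA update Q(S, A) ← Q(S, A) + α [R + γ Q(S′, A′) - Q(S, A)], where A′ is the action the ε-greedy policy actually picks in S′. The name `sarsa` and all parameter values are illustrative assumptions.

```python
import random


def sarsa(length=5, episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular SARSA on a hypothetical 1-D corridor (last cell = terminal goal;
    action 0 moves left, action 1 moves right)."""
    rng = random.Random(seed)
    goal = length - 1
    Q = [[0.0, 0.0] for _ in range(length)]  # Q[state][action]

    def step(state, action):
        nxt = min(max(state + (1 if action == 1 else -1), 0), goal)
        reward = 1.0 if nxt == goal else -0.1  # assumed reward scheme
        return nxt, reward, nxt == goal

    def policy(state):
        # The same epsilon-greedy policy is used to act AND to form targets
        if rng.random() < epsilon or Q[state][0] == Q[state][1]:
            return rng.randrange(2)
        return 0 if Q[state][0] > Q[state][1] else 1

    for _ in range(episodes):
        state, done = 0, False
        action = policy(state)                          # S, A
        while not done:
            nxt, reward, done = step(state, action)     # R, S'
            if done:
                target = reward                         # no value after the goal
            else:
                next_action = policy(nxt)               # A' from the current policy
                target = reward + gamma * Q[nxt][next_action]
            Q[state][action] += alpha * (target - Q[state][action])
            if not done:
                state, action = nxt, next_action        # A' is really taken next
    return Q


Q = sarsa()
print(["right" if q[1] > q[0] else "left" for q in Q[:-1]])
```

The only change from Q-learning is the target: SARSA bootstraps from Q(S′, A′) for the action it will actually take, rather than from the maximum over next actions, which is what makes it on-policy.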

Deep Q-Network (DQN)

As its name suggests, DQN is a Q-learning algorithm that uses a neural network. When the state space is very large, defining and updating a Q-table becomes impractical. DQN resolves this issue: instead of building a Q-table, it uses a neural network that takes a state as input and estimates the Q-value of each action in that state.
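The core DQN update can be sketched with NumPy: a small network outputs one Q-value per action, the TD target r + γ max_a′ Q_target(s′, a′) is computed with a separate target network, and one gradient step reduces the squared TD error for the actions that were taken. This is a simplified sketch under assumptions: the network size, initialization, learning rate, the random batch standing in for samples from a replay buffer, and the names `QNetwork` and `dqn_update` are all hypothetical. A complete DQN would also need experience replay, ε-greedy exploration, and an environment loop.

```python
import numpy as np


class QNetwork:
    """Tiny one-hidden-layer MLP mapping a state vector to one Q-value per action."""

    def __init__(self, state_dim, n_actions, hidden=16, seed=0):
        rng = np.random.default_rng(seed)
        self.W1 = rng.normal(0.0, 0.5, (state_dim, hidden))
        self.b1 = np.zeros(hidden)
        self.W2 = rng.normal(0.0, 0.5, (hidden, n_actions))
        self.b2 = np.zeros(n_actions)

    def forward(self, states):
        """states: (batch, state_dim) -> (Q-values (batch, n_actions), hidden activations)."""
        h = np.maximum(0.0, states @ self.W1 + self.b1)  # ReLU hidden layer
        return h @ self.W2 + self.b2, h

    def copy_from(self, other):
        for name in ("W1", "b1", "W2", "b2"):
            setattr(self, name, getattr(other, name).copy())


def dqn_update(online, target, batch, gamma=0.99, lr=0.01):
    """One gradient step on the squared TD error for a batch of transitions
    (states, actions, rewards, next_states, dones). Returns the pre-update loss."""
    states, actions, rewards, next_states, dones = batch
    # TD target: best next action scored by the *target* network (as in Q-learning)
    next_q, _ = target.forward(next_states)
    y = rewards + gamma * (1.0 - dones) * next_q.max(axis=1)

    q, h = online.forward(states)
    idx = np.arange(len(actions))
    td_error = q[idx, actions] - y

    # Backpropagate 0.5 * mean(td_error**2) through the chosen actions only
    grad_q = np.zeros_like(q)
    grad_q[idx, actions] = td_error / len(actions)
    grad_W2 = h.T @ grad_q
    grad_b2 = grad_q.sum(axis=0)
    grad_h = (grad_q @ online.W2.T) * (h > 0)  # ReLU derivative
    grad_W1 = states.T @ grad_h
    grad_b1 = grad_h.sum(axis=0)

    online.W2 -= lr * grad_W2
    online.b2 -= lr * grad_b2
    online.W1 -= lr * grad_W1
    online.b1 -= lr * grad_b1
    return float(np.mean(td_error ** 2))


online = QNetwork(state_dim=2, n_actions=2, seed=0)
target = QNetwork(state_dim=2, n_actions=2, seed=1)
target.copy_from(online)  # the target network starts as a copy of the online one

# A made-up batch standing in for transitions sampled from a replay buffer
rng = np.random.default_rng(1)
batch = (
    rng.normal(size=(8, 2)),     # states
    rng.integers(0, 2, size=8),  # actions taken
    rng.normal(size=8),          # rewards
    rng.normal(size=(8, 2)),     # next states
    np.zeros(8),                 # done flags (1.0 = episode ended)
)
losses = [dqn_update(online, target, batch) for _ in range(300)]
print(losses[0], losses[-1])
```

Keeping the target network fixed between periodic `copy_from` refreshes turns each stretch of training into an ordinary regression problem with stable targets, which is the main reason DQN uses a second network instead of bootstrapping from the one being trained.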

Real-World Applications Of Reinforcement Learning

- RL is used in robotics, for example in robot navigation and Robo-soccer.

- RL can be used for adaptive control, for example of factory processes or admission control in telecommunication networks.

- RL can be used in game playing, such as tic-tac-toe and chess.

- In various automobile manufacturing companies, robots use deep reinforcement learning to pick up goods and put them in containers.

- RL is also applied to business strategy planning, data processing, and recommendation systems.